Among the flurry of Google Assistant updates presented on stage at I/O 2018, Google also announced an impressive upgrade to its TPU chips, once again pushing the limits of machine learning hardware.

In 2017, Google's own benchmarks showed its TPUs running 15-30x faster than contemporary GPUs and CPUs. This year the bar is even higher: Google says its new Tensor Processing Unit pods are more than eight times as powerful as last year's.

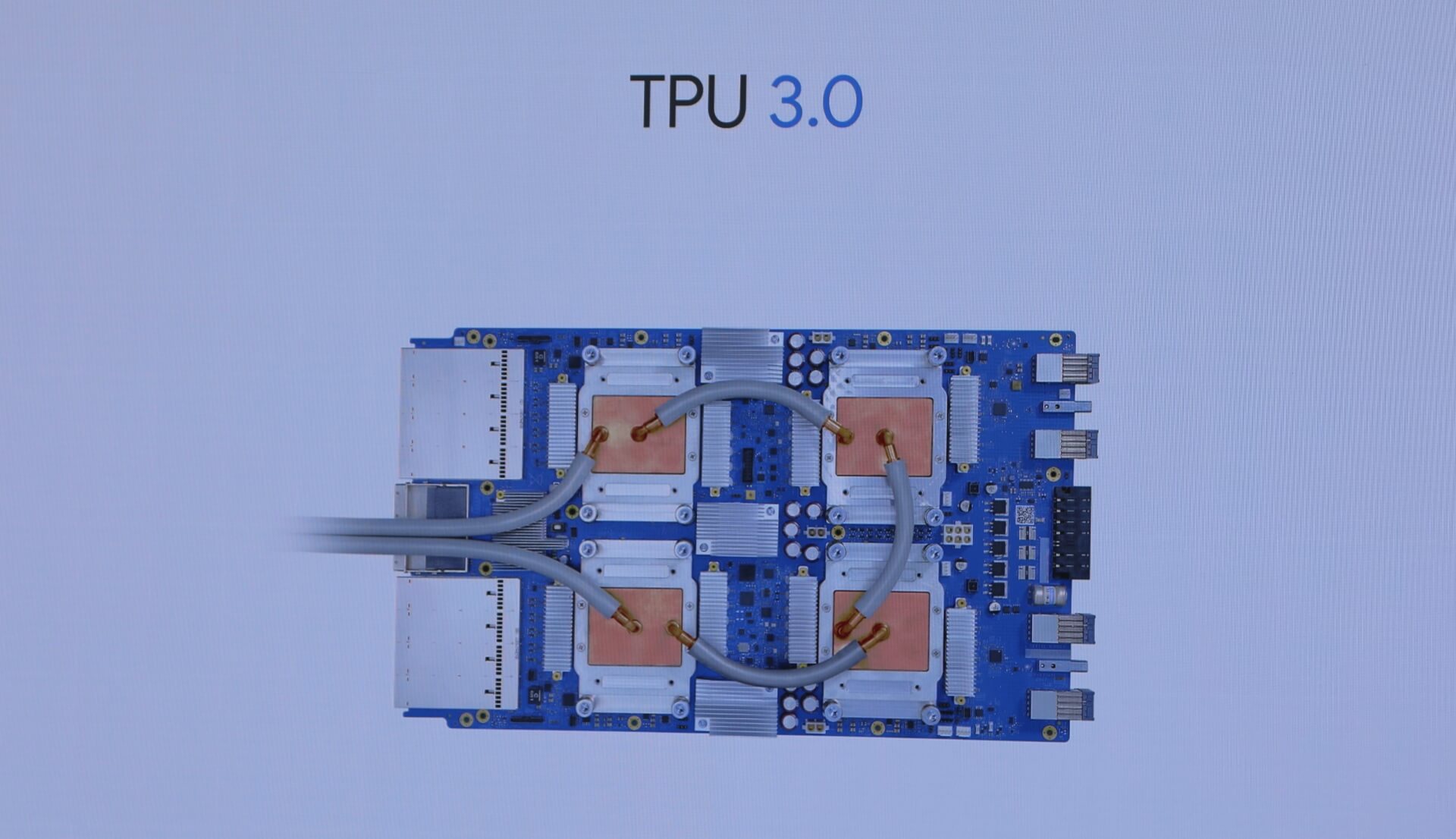

In fact, the new chips are so power-hungry that Google's data centers are using liquid cooling for the first time.

Today we’re announcing our third generation of TPUs. Our latest liquid-cooled TPU Pod is more than 8X more powerful than last year’s, delivering more than 100 petaflops of ML hardware acceleration. #io18 pic.twitter.com/m8OH5vFw4g

— Google (@Google) May 8, 2018

It's no surprise that Google, like Amazon and Facebook, is racing to dominate the market for machine learning hardware. By running TensorFlow on these TPUs, researchers, developers and large organizations can tap high-end compute resources to build machine learning applications.

Just look at how quickly TensorFlow's Object Detection API can pick out the individual objects in an image!
