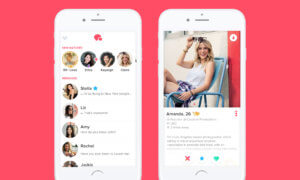

Body shaming may be a regular occurrence in real life, but at least one dating app is putting a stop to it online.

Bumble has stated that its algorithms will now “flag language that can be deemed fat-phobic, ableist, racist, colorist, homophobic or transphobic.”

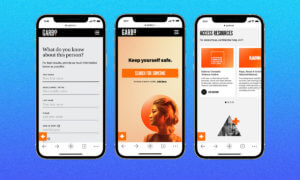

And if the AI hasn’t spotted a particular offensive comment or account, users can report it themselves.

Any derogatory language the AI flags will be reviewed by human moderators every single time.

What happens when an account is flagged for improper behavior?

For the first offense, the user will get a straightforward warning. If the incident repeats, the user could be banned permanently from the dating app.

This is just one of the features Bumble has implemented to enforce civilized behavior on its platform.

Last summer, Bumble began identifying unsolicited nude photos sent from one user to another with 98% accuracy and blurring them, a feature known to users as Private Detector.

Follow TechTheLead on Google News to get the news first.