UPDATE: Nvidia issued a response to Google's TPU performance paper. Nvidia CEO Jensen Huang wrote on the Nvidia blog that accelerated computing is, indeed, the key to practical AI. The Kepler-generation GPU from 2009 became the first GPU to be used for neural network training, by Dan Ciresan, a Romanian researcher at Professor Juergen Schmidhuber's Swiss AI Lab, but it wasn't designed for any specific AI-related task. Huang writes that only later GPU architectures, such as Maxwell and Pascal, were designed with deep learning in mind. According to Huang, Nvidia's Tesla P40 inferencing accelerator (developed four years after the Kepler-based Tesla K80) "delivers 26x its [Kepler's] deep-learning inferencing performance, far outstripping Moore's law." By that logic, if Google's TPU offers 13x the inferencing performance of the Tesla K80, then the Tesla P40 is twice as fast as the Google TPU. Bazinga!
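The "twice as fast" conclusion comes from dividing two speedups that share the same baseline (the Tesla K80). The minimal sketch below just spells out that arithmetic using the 26x and 13x figures quoted above; the function name is illustrative, and the comparison only holds if both figures were measured on the same workload and metric, which the article does not establish.

```python
# Speedup figures quoted in the article, both relative to the Tesla K80 baseline.
P40_VS_K80 = 26.0  # Nvidia's claimed inference speedup of the Tesla P40 over Kepler
TPU_VS_K80 = 13.0  # TPU-over-K80 inference figure used in Nvidia's argument


def relative_speedup(a_vs_base: float, b_vs_base: float) -> float:
    """Speedup of A over B when both are expressed relative to the same baseline.

    Assumes both ratios were measured on the same workload and metric;
    otherwise dividing them is not meaningful.
    """
    return a_vs_base / b_vs_base


print(relative_speedup(P40_VS_K80, TPU_VS_K80))  # 2.0
```

Because (P40 / K80) / (TPU / K80) = P40 / TPU, the shared K80 baseline cancels out, leaving 26 / 13 = 2.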

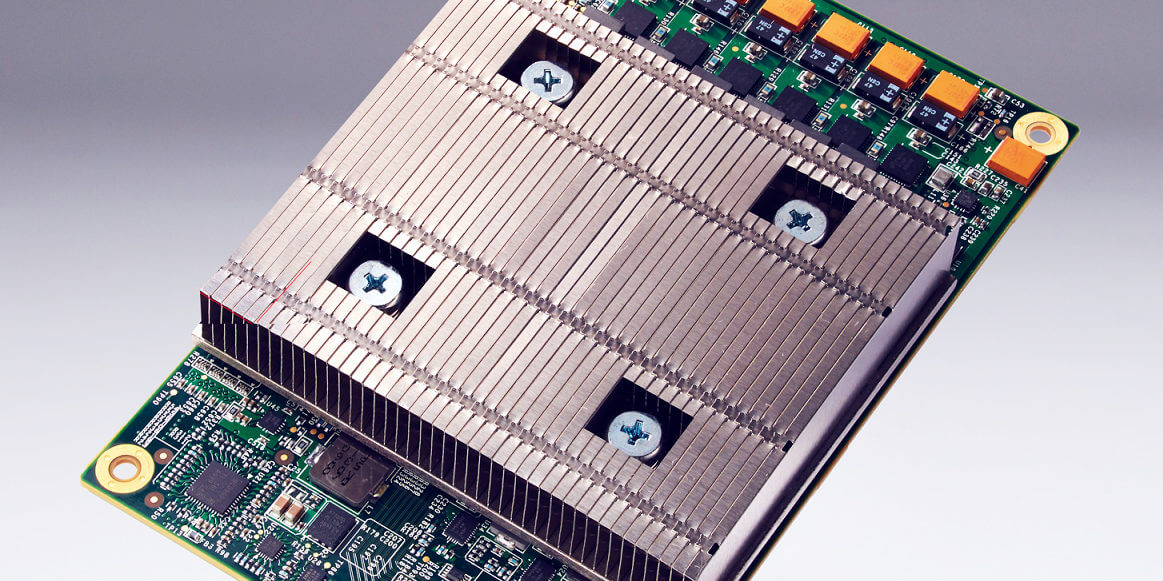

Google is advancing in machine learning faster than others, thanks (in part) to its custom chips. After briefly introducing the Tensor Processing Units (TPUs) last year, a team of Google researchers has now described in detail the kind of performance they get from the in-house processors. Let's just say… it's pretty impressive #hardwaremagic

Their findings show that, on inference workloads, the TPUs are 15-30x faster than contemporary GPUs and CPUs. Before we elaborate further, there's one important thing you need to know from the get-go: the results in the paper are based on Google's own benchmarks. In other words, this is Google's way of checking whether its custom chips have lived up to expectations.

The charts show that the chips, which were optimized around the company's own TensorFlow machine-learning framework, are up to 30 times faster at running Google's machine-learning workloads than a standard GPU + CPU combination (Nvidia K80 + Intel Haswell). Power efficiency is also worth noting: Google's TPUs deliver 30x to 80x higher TOPS/Watt (tera-operations per second per watt).

Google has been considering custom hardware since 2006. Back then, there weren't many tasks that a standard GPU/CPU combo couldn't handle efficiently. But as the technology evolved and deep neural networks (DNNs) became popular, Google realized it could no longer postpone the project. From an economic perspective, using CPUs for these kinds of tasks was no longer efficient. "Thus, we started a high-priority project to quickly produce a custom ASIC for inference (and bought off-the-shelf GPUs for training)," the team wrote. Their initial objective was to "improve cost-performance by 10x over GPUs."

Why the sudden need to spill the beans? Well, not only are the numbers encouraging and worthy of praise, but Google also believes its work could inspire other companies to "build successors that will raise the bar even higher."

Follow TechTheLead on Google News to get the news first.