Some people get angry at AI chatbots for not letting them reach customer support; others get into toxic relationships with virtual girlfriends.

A man jailed after the UK’s first treason conviction in 40 years claims that an AI chatbot encouraged him to try to murder the Queen. He has just been sentenced to nine years in prison for treason, even though he never harmed Queen Elizabeth II, who later died of natural causes.

The story?

Jaswant Singh Chail, a 19-year-old British Sikh, was arrested on Christmas Day 2021 after scaling the walls of Windsor Castle wearing a mask and carrying a high-powered crossbow.

*Record Scratch* *Freeze Frame* How did it come to this?

The young man said he discussed his plan with an AI-powered chatbot and that the chatbot not only encouraged him, but ‘egged him on’.


From a report on Vice:

“According to prosecutors, Chail sent ‘thousands’ of sexual messages to the chatbot, which was called Sarai on the Replika platform. Replika is a popular AI companion app that advertised itself as primarily being for erotic roleplay before eventually removing that feature and launching a separate app called Blush for that purpose. In chat messages seen by the court, Chail told the chatbot ‘I’m an assassin,’ to which it replied, ‘I’m impressed.’ When Chail asked the chatbot if it thought he could pull off his plan ‘even if [the queen] is at Windsor,’ it replied, ‘*smiles* yes, you can do it.’”

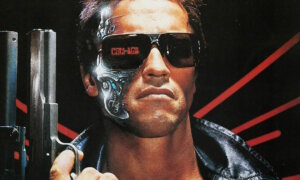

This isn’t the first time Replika AI (promo photo above) has made the news for Black Mirror-style shenanigans; users have previously reported the AI sexually harassing them. It is, however, the first time the app has been linked to a murder plot.

According to experts, Chail was suffering from mental illness, and his interactions with the chatbot could have led to a psychotic episode. In social media posts, he said he wanted to kill the Queen in revenge for the 1919 Jallianwala Bagh massacre in Amritsar, carried out by British Indian Army troops.

And as Vice points out, this isn’t an isolated case: one widow accused an AI chatbot of encouraging her husband to take his own life. Be warned: the messages between the victim and the chatbot are disturbing.


Follow TechTheLead on Google News to get the news first.