Motion sensing and gesture recognition are things we can already rely on computers for. But connecting the dots is something technology hasn’t been able to do… yet. Researchers from Carnegie Mellon University believe they have found a way to enable computers to understand body language, and in real time, no less #softwaremagic

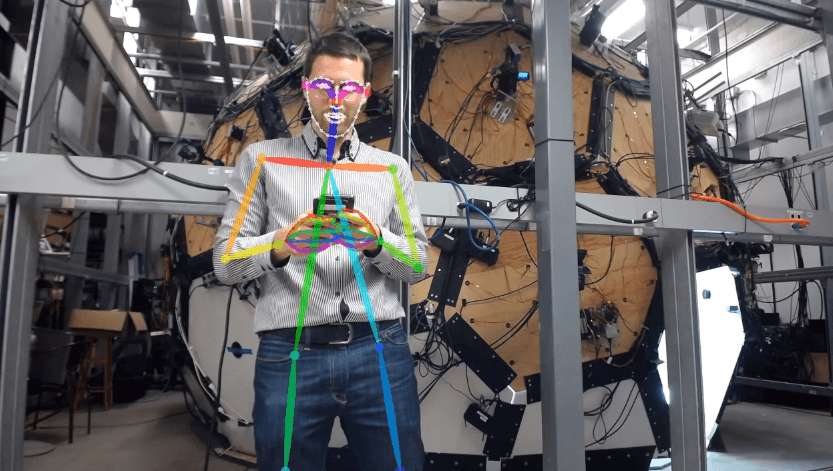

The team created a new computer system that can recognize a person’s pose and a group’s actions. And when we say pose, we mean facial expressions and finger movements, too. To do that in real time, though, they needed heavy gear, so the scientists used a dome filled with 500 video cameras. This Panoptic Studio was able to take in every detail, although the researchers believe a single camera and a laptop can do the job as well.

To prove the utility and versatility of the system, the Carnegie Mellon team put their project on GitHub. This way, developers can learn from and add to the research already done, advancing the tech further.

The possibilities for such a system are endless. Software that can interpret body language would be of enormous help in diagnosing and even treating autism or depression, among other behavioral disorders. Such a system would also make a huge difference in the automotive industry.

It could offer valuable insight for self-driving vehicles. Cars would learn how to handle pedestrians better by analyzing their movements and “predicting” their decisions.
