Twitter has been taking hate speech and hateful conduct across its platform more seriously in recent weeks and seems dead set on fulfilling its promise to make the platform a safer place for everyone.

It recently updated its rules on hate speech to ban dehumanizing language based on religion.

“Our primary focus is on addressing the risks of offline harm, and research shows that dehumanizing language increases that risk,” Twitter said in a blog post that detailed the change. “As a result, after months of conversations and feedback from the public, external experts and our own teams, we’re expanding our rules against hateful conduct to include language that dehumanizes others on the basis of religion.”

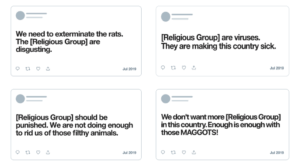

Twitter will now remove tweets that spread this particular type of hate from its platform. The company added an image to the blog post, shown above, with examples of the types of comments and posts that will no longer be tolerated.

Tweets of this kind that were posted before the rule took effect will have to be removed, but they will not get the user’s account suspended, since they were written before the ban.

Twitter said it decided to implement the rule after receiving feedback from 8,000 Twitter users in 30 countries, who pitched in with ideas on how to improve the platform and make it a safer place for everyone to enjoy.

According to Twitter, some of the themes that came up most often in the feedback were:

Clearer language – “Across languages, people believed the proposed change could be improved by providing more details, examples of violations, and explanations for when and how context is considered. We incorporated this feedback when refining this rule, and also made sure that we provided additional detail and clarity across all our rules.”

Narrow down what’s considered – “Respondents said that ‘identifiable groups’ was too broad, and they should be allowed to engage with political groups, hate groups, and other non-marginalized groups with this type of language. Many people wanted to ‘call out hate groups in any way, any time, without fear.’ In other instances, people wanted to be able to refer to fans, friends and followers in endearing terms, such as ‘kittens’ and ‘monsters.’”

Consistent enforcement – “Many people raised concerns about our ability to enforce our rules fairly and consistently, so we developed a longer, more in-depth training process with our teams to make sure they were better informed when reviewing reports. For this update it was especially important to spend time reviewing examples of what could potentially go against this rule, due to the shift we outlined earlier.”

These changes come at a time when religious minorities are being attacked more openly online – after all, it’s easy to hide behind an avatar, a username and the security of a screen.

Social media is also known to have played an important role in recent religion-motivated attacks – the killing spree in Christchurch, New Zealand, still stands as one of the most prominent examples of this problem.

Speaking strictly from a U.S. point of view, the Anti-Defamation League found that 37% of Americans experienced some form of severe online hate and harassment in 2018.

A more recent survey found that 35% of Muslims and 16% of Jews experienced online hate speech based strictly on their religious affiliation.
