Professor Parham Aarabi and graduate student Avishek Bose of U of T Engineering have created an algorithm that disrupts facial recognition systems.

Their approach to countering facial recognition software relies on a technique called adversarial training, which pits two AI algorithms against each other.

One algorithm does what facial recognition software is supposed to do, which is identify faces, while the other actively works to disrupt that task. The two algorithms are in constant competition, and each learns from the other.
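To make the idea concrete, here is a minimal toy sketch of adversarial training in plain Python. It is not the researchers' system: the two-number "feature vectors", the logistic-regression detector, the single shared perturbation `d`, and every name and parameter (`train_adversarially`, `eps`, `rounds`, `lr`) are assumptions chosen for illustration. The detector learns to tell faces from non-faces, while the disruptor learns a small, bounded shift that lowers the detector's confidence on faces.

```python
import math
import random


def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))


def score(w, b, x):
    """Detector's estimated probability that feature vector x is a face."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def shift(x, d):
    """Apply the disruptor's perturbation d to a feature vector x."""
    return [xi + di for xi, di in zip(x, d)]


def train_adversarially(faces, non_faces, eps=0.5, rounds=200, lr=0.1):
    dim = len(faces[0])
    w, b = [0.0] * dim, 0.0  # detector parameters (hypothetical toy model)
    d = [0.0] * dim          # disruptor's shared perturbation
    for _ in range(rounds):
        # Detector step: logistic-regression updates on perturbed faces
        # (label 1) and untouched non-faces (label 0).
        batch = [(shift(x, d), 1.0) for x in faces]
        batch += [(x, 0.0) for x in non_faces]
        for x, y in batch:
            err = score(w, b, x) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
        # Disruptor step: move d downhill on the detector's mean face score,
        # then clip so the perturbation never exceeds eps per feature.
        grad = [0.0] * dim
        for x in faces:
            p = score(w, b, shift(x, d))
            for i in range(dim):
                grad[i] += p * (1.0 - p) * w[i] / len(faces)
        d = [max(-eps, min(eps, di - lr * gi)) for di, gi in zip(d, grad)]
    return w, b, d


# Synthetic stand-in data: "faces" cluster near (1, 1), "non-faces" near (-1, -1).
rng = random.Random(0)
faces = [[rng.gauss(1.0, 0.3), rng.gauss(1.0, 0.3)] for _ in range(50)]
non_faces = [[rng.gauss(-1.0, 0.3), rng.gauss(-1.0, 0.3)] for _ in range(50)]
w, b, d = train_adversarially(faces, non_faces)
print("learned perturbation:", d)
```

Because each side is updated against the other's latest state, the detector keeps adapting to the perturbed faces and the disruptor keeps adapting to the retrained detector. A real system would use neural networks on image pixels rather than two-number vectors.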

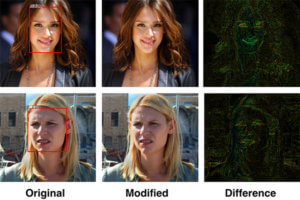

Their arms race is what creates the ‘privacy filter’ – an algorithm that alters specific pixels within the image that, although they’re not visible to the naked eye, the face detection AI has issues recognizing afterwards.

Credit: Avishek Bose
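As a rough illustration of how tiny pixel changes can affect a detector, the sketch below nudges every pixel by at most `eps` intensity levels (out of 255) in whichever direction lowers a detector's score. Everything here is an assumption for illustration: the detector is a hypothetical linear scorer with made-up weights, and the update is a simple gradient-sign step, not the neural-network filter the team trained.

```python
def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def detector_score(weights, image):
    """Hypothetical linear detector: a higher score means 'more face-like'."""
    return sum(w * p
               for w_row, p_row in zip(weights, image)
               for w, p in zip(w_row, p_row))


def apply_privacy_perturbation(image, weights, eps=2):
    """Shift each pixel by at most eps against the detector's gradient.

    For a linear detector the gradient with respect to a pixel is just its
    weight, so moving the pixel opposite to the weight's sign lowers the score.
    Results are clipped to the valid 0-255 intensity range.
    """
    return [[max(0, min(255, p - eps * sign(w)))
             for p, w in zip(p_row, w_row)]
            for p_row, w_row in zip(image, weights)]


# A made-up 3x3 grayscale patch and made-up detector weights.
image = [[120, 130, 125],
         [118, 200, 122],
         [119, 128, 121]]
weights = [[1, -2, 1],
           [-2, 5, -2],
           [1, -2, 1]]

filtered = apply_privacy_perturbation(image, weights, eps=2)
print("score before:", detector_score(weights, image))
print("score after: ", detector_score(weights, filtered))
```

No pixel moves by more than 2 levels, a change a viewer would not notice, yet the detector's score drops because every small shift pushes in the direction that hurts it most.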

The system was tested on the 300-W face dataset, which includes more than 600 faces spanning different ethnicities and environments. All of the faces were detectable at first, but after the filter was applied the detection rate fell from 100 percent to just 0.5 percent.

Aarabi has said that “ten years ago these algorithms would have to be human defined, but now neural nets learn by themselves — you don’t need to supply them anything except training data.”

The new system also disrupts ethnicity estimation, along with any other attributes that could be extracted from photos automatically.

The team plans to make the privacy filter available to the public via an app or a website.
