No question about it, Google Assistant’s demo at the I/O conference impressed everyone and made Siri look seriously slow. But what about the actual numbers? Loup Ventures just pitted Siri, Google Assistant, Alexa, and Cortana against each other in a new test, and here are the results.

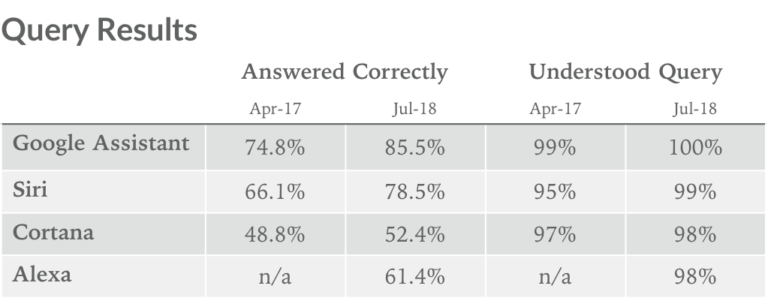

The experiment began in 2017 and consists of 800 questions that each smart assistant has to answer, after which their success rates are compared. In 2018, Siri understood 99% of the questions asked of her but answered only 78.5% of them correctly. Still, that is a massive improvement over last year’s test, when Siri correctly answered only 66.1% of the questions.

To grade each digital assistant, Loup Ventures used two metrics: “Did the assistant understand the question?” and “Did the assistant answer correctly?” These were applied across five categories: local, commerce, navigation, information, and command. Now let’s look at the results!

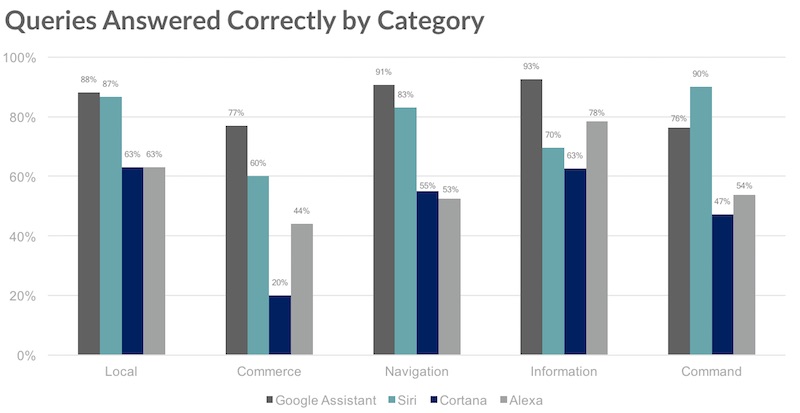

All in all, the leader of the pack is Google Assistant, which answered 85.5% of the questions correctly, followed by Siri with 78.5%, Alexa with 61.4%, and Cortana with just 52.4%.

“Google Assistant has the edge in every category except Command. Siri’s lead over the Assistant in this category is odd, given they are both baked into the OS of the phone rather than living on a 3rd party app (as Cortana and Alexa do). We found Siri to be slightly more helpful and versatile (responding to more flexible language) in controlling your phone, smart home, music, etc. Our question set also includes a fair amount of music-related queries (the most common action for smart speakers). Apple, true to its roots, has ensured that Siri is capable with music on both mobile devices and smart speakers,” summed up a Loup Ventures representative.

If you’re thinking about shopping for a smart assistant, the full Loup Ventures report is worth a read.

