Google put its AI to an unusual test: photo editing. Using Street View panoramas, it challenged the artificial intelligence to produce images that could pass for professional photos taken by humans. #machinemagic

The company tested whether its AI could work like a human photographer, at least in the post-processing phase. Google’s engineers used machine learning to train a deep neural network to compose and edit striking images from the imagery available on Street View (Google Maps). Unlike previous tests, this one judged the machine’s ability to create something subjectively beautiful.

Because beauty is subjective, the task was unusual in scope, so the team tackled it with a different technique: a generative adversarial network (GAN). In short, two neural networks are trained against each other, and each one’s output is used to improve the other.

In this case, the network acting as photo editor had one mission: to fix badly lit photos or ones that had been ruined by poor filters. The other model had to figure out which photos were edited and which were genuine professional shots. Through this contest, the system learned what “good” and “bad” photos look like and, in turn, how to edit raw pictures to meet the “good” criteria.
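To make the editor-versus-judge idea concrete, here is a toy sketch in Python. It is not Google's system: each "photo" is reduced to a single made-up brightness number, the editor can only add a fixed brightness offset, and the judge is a one-feature logistic model. The names `train`, `offset`, `pro_photos` and `bad_photos` are hypothetical, and the weight decay on the judge is an assumption added only to keep this tiny example from oscillating.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mean(values):
    return sum(values) / len(values)

def train(pro, bad, steps=1000, lr=0.1, decay=1.0):
    """Alternate judge and editor updates; return (offset, w, c)."""
    offset = 0.0     # editor: brightness added to every bad photo
    w, c = 0.0, 0.0  # judge: P(photo is professional) = sigmoid(w * v + c)
    for _ in range(steps):
        edited = [v + offset for v in bad]
        p_pro = [sigmoid(w * v + c) for v in pro]
        p_edit = [sigmoid(w * v + c) for v in edited]
        # Judge step: gradient ascent on log D(pro) + log(1 - D(edited)),
        # minus a weight-decay term (toy-only assumption, see above).
        grad_w = (mean([(1 - p) * v for p, v in zip(p_pro, pro)])
                  - mean([p * v for p, v in zip(p_edit, edited)])
                  - decay * w)
        grad_c = mean([1 - p for p in p_pro]) - mean(p_edit) - decay * c
        w += lr * grad_w
        c += lr * grad_c
        # Editor step: gradient ascent on log D(edited), i.e. try to
        # make the judge rate the edited photos as professional.
        p_edit = [sigmoid(w * v + c) for v in edited]
        offset += lr * mean([(1 - p) * w for p in p_edit])
    return offset, w, c

pro_photos = [0.55, 0.60, 0.65]  # made-up brightness of "professional" shots
bad_photos = [0.15, 0.20, 0.25]  # made-up brightness of underexposed shots
offset, w, c = train(pro_photos, bad_photos)
print(f"learned brightness offset: {offset:.3f}")
print(f"judge's score for a pro photo: {sigmoid(w * 0.60 + c):.3f}")
```

In this toy setup, the editor is never told what a good photo looks like. It only sees how the judge reacts, and it keeps adjusting until the judge can no longer tell its edits from the professional shots. A real GAN does the same thing with deep networks and full images instead of one number per photo.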

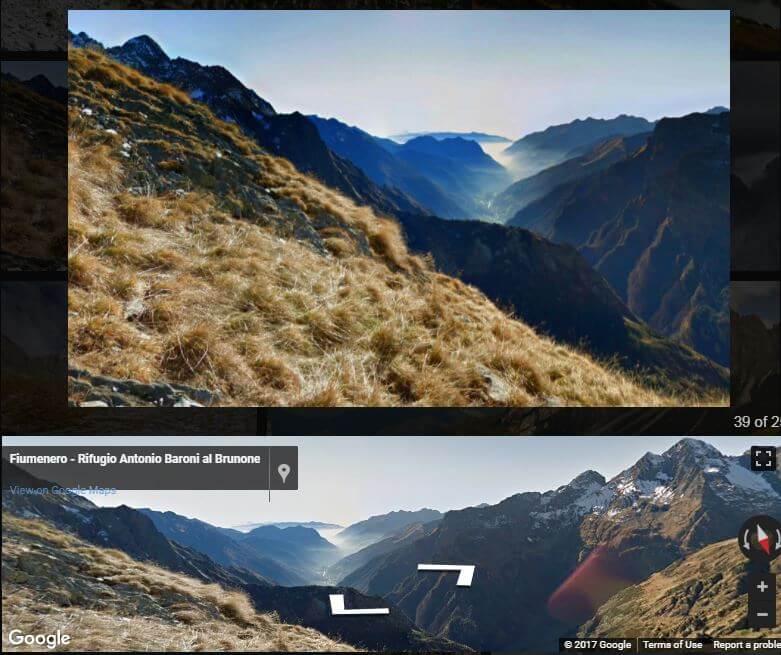

After the AI used what it had learned to crop Street View panoramas and edit the crops, researchers invited human photographers to grade the pictures. They “forgot” to tell them that photos captured by humans were mixed in among the computer-generated pictures. In the end, about two out of every five pictures received a score on par with semi-pro or pro work.
