So far, projects that aim to capture sign language and translate it have had little real success. It is no easy task: hand movements are subtle and often too quick for a machine to catch in real time. But a new project from Google’s AI labs might make a difference in the near future.

Google’s software uses machine learning to produce a ‘map’ of the hand and fingers using just a smartphone’s camera. It is built from three AI models that work together. The first is a palm detector, called BlazePalm, which locates the palm in the frame so that the rest of the system knows where the hand is and does not have to search the whole image.

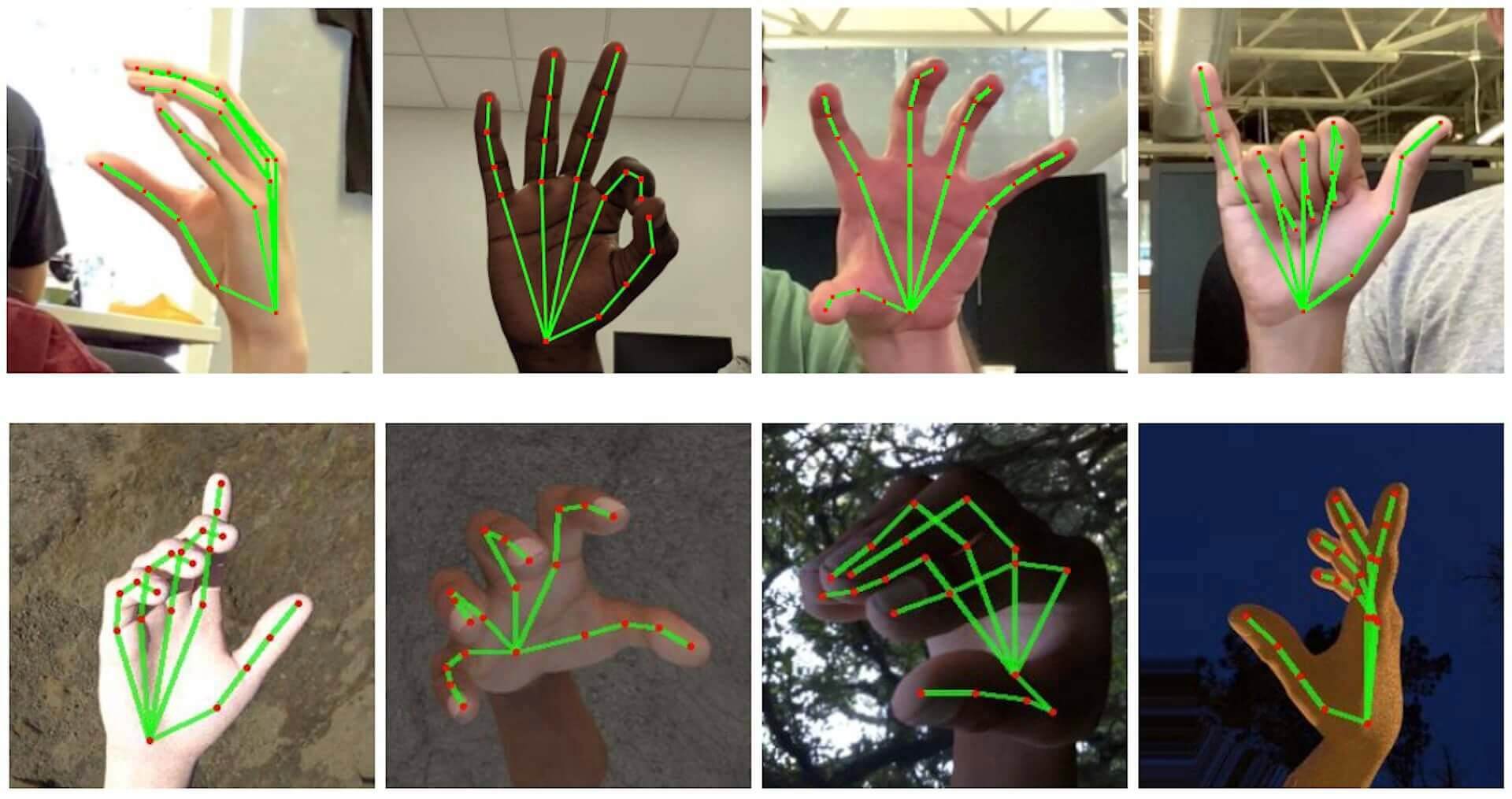

The second AI model is a hand landmark model, which looks at the cropped region of the image that BlazePalm detected and marks 21 coordinates on the hand, including a depth value that indicates how far away each point is. Lastly, a gesture recognizer classifies the resulting configuration of points into gestures.
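To make the last stage concrete, here is a minimal Python sketch of how a set of 21 hand keypoints could be turned into a gesture label. It is an illustration only, not Google’s classifier: the index numbering (0 for the wrist, 8/12/16/20 for the fingertips, 6/10/14/18 for the middle joints) is assumed to follow MediaPipe’s hand landmark layout, and the “tip farther from the wrist than the middle joint” rule, the `finger_states` and `classify` helpers, and the gesture names are all hypothetical.

```python
from math import dist

WRIST = 0  # assumed index of the wrist keypoint

# Assumed (fingertip, middle-joint) indices per finger; thumb left out for simplicity.
FINGERS = {
    "index": (8, 6),
    "middle": (12, 10),
    "ring": (16, 14),
    "pinky": (20, 18),
}

# Hypothetical lookup from (index, middle, ring, pinky) states to a label.
GESTURES = {
    (False, False, False, False): "fist",
    (True, False, False, False): "pointing",
    (True, True, False, False): "victory",
    (True, True, True, True): "open palm",
}


def finger_states(landmarks):
    """Map each finger name to True if it looks extended.

    `landmarks` is a sequence of 21 (x, y, z) tuples. A finger counts as
    extended when its tip is farther from the wrist than its middle joint.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 landmarks")
    wrist = landmarks[WRIST]
    return {
        name: dist(landmarks[tip], wrist) > dist(landmarks[pip], wrist)
        for name, (tip, pip) in FINGERS.items()
    }


def classify(landmarks):
    """Return a gesture label, or "unknown" if no rule matches."""
    states = finger_states(landmarks)
    key = tuple(states[name] for name in FINGERS)
    return GESTURES.get(key, "unknown")
```

A real recognizer would need to handle the thumb, hand rotation, and noisy keypoints, but the sketch shows why a compact set of coordinates is enough to reason about hand shape without touching the raw pixels again.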

The team behind the system had to add those 21 points by hand to 30,000 images featuring hands in different poses and lighting conditions so that the machine learning system could learn what it is looking at.

The result is a system from Google’s AI labs that runs comfortably on a smartphone within the MediaPipe framework.

“Whereas current state-of-the-art approaches rely primarily on powerful desktop environments for inference, our method achieves real-time performance on a mobile phone, and even scales to multiple hands,” researchers Valentin Bazarevsky and Fan Zhang said in a blog post. “Robust real-time hand perception is a decidedly challenging computer vision task, as hands often occlude themselves or each other (e.g. finger/palm occlusions and hand shakes) and lack high contrast patterns.”

For the time being, the researchers have not added the software to any products. Instead, they are giving it away for free in the hope that others will take their work further and improve on it.

“We hope that providing this hand perception functionality to the wider research and development community will result in an emergence of creative use cases, stimulating new applications and new research avenues,” Bazarevsky and Zhang said.
