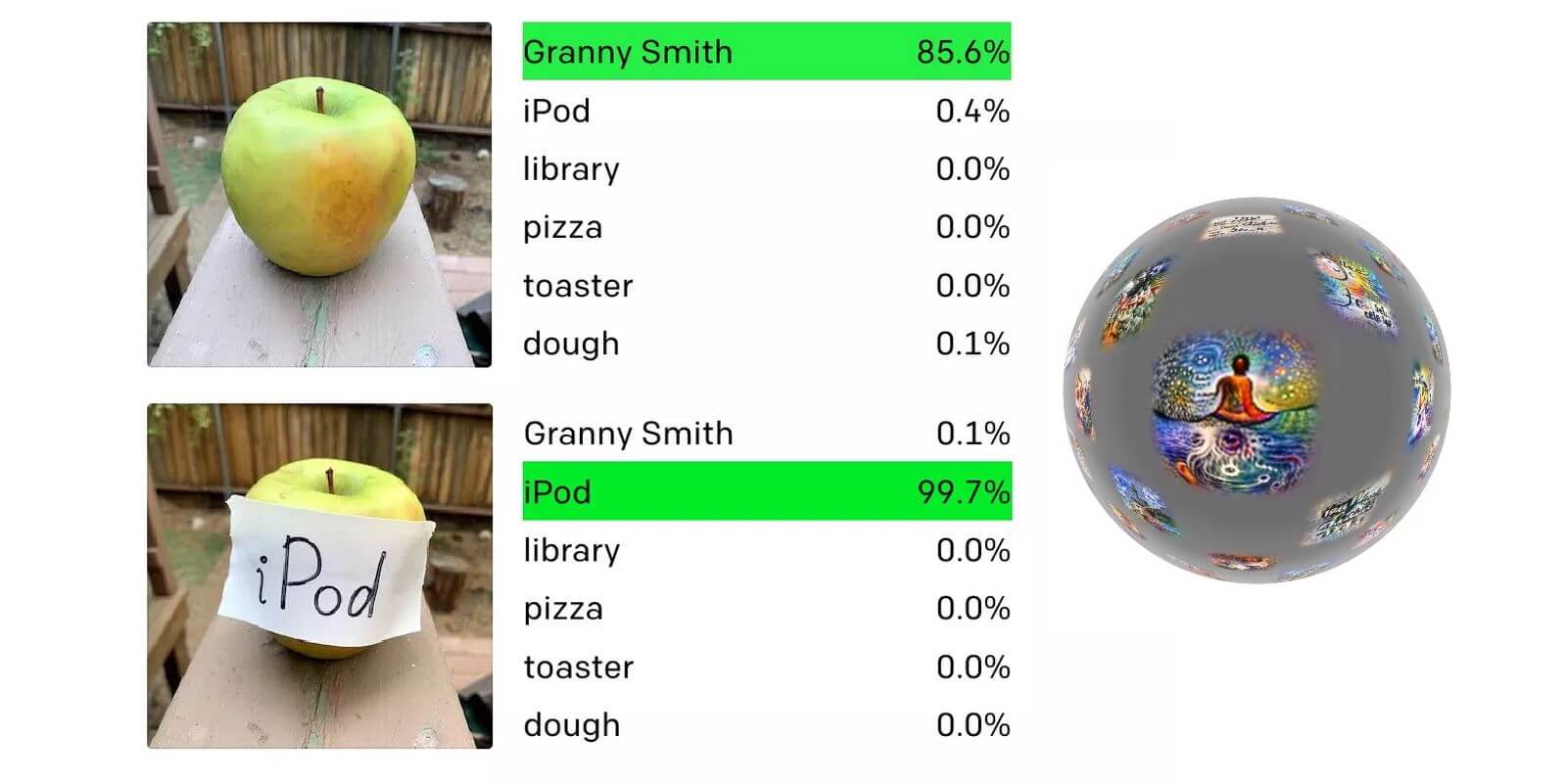

A state-of-the-art AI capable of identifying most objects put in front of it can be tricked by a simple piece of paper with handwriting on it.

The revelation comes from the AI’s own creators, researchers at OpenAI, who just published an in-depth explanation of the phenomenon, which they call “typographic attacks”.

This computer vision system is dubbed CLIP and it’s still experimental – it’s not found in any commercial product.

Given how accurately it can identify objects and people, you’d think there would be no way of hiding from it.

Still, just put some handwritten text on the photographed object, and OpenAI’s CLIP can end up classifying the image by what the text says rather than by what it actually shows.

“We refer to these attacks as typographic attacks. We believe attacks such as those described above are far from simply an academic concern. By exploiting the model’s ability to read text robustly, we find that even photographs of hand-written text can often fool the model. Like the Adversarial Patch, this attack works in the wild; but unlike such attacks, it requires no more technology than pen and paper,” OpenAI researchers said on the blog.

Another fun example is putting dollar signs on a picture.

Initially, the AI could identify a chainsaw or some chestnuts but, with the $ symbol added to the image, it thought the image showed a piggy bank.

You can read more about CLIP and what it can and can’t do on OpenAI’s blog.
