This summer, the Defense Advanced Research Projects Agency (DARPA) will gather the world’s leading digital forensics experts to compete against each other in a race to create the most convincing AI-generated fake video.

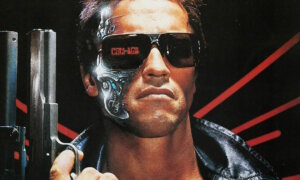

The contest includes the creation of so-called ‘deepfakes’: videos in which one person’s face is superimposed on another person’s body.

DARPA is especially concerned about a new AI technique that could make these fakes almost impossible to detect. The technique uses generative adversarial networks (GANs), which can create extremely realistic artificial imagery.

Above, actor and filmmaker Jordan Peele manipulates former President Barack Obama’s features and body language to speak about fake news.

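A GAN pits two neural networks against each other: an ‘actor’ (the generator) and a ‘critic’ (the discriminator). The actor learns the statistical patterns in a data set, such as a collection of images or videos, and uses them as a foundation to generate realistic new data. The critic tries to distinguish real examples from fakes. As training goes on, the critic gets better at spotting fakes and the actor gets better at fooling it, until the actor’s output is hard to tell from the real thing.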
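To make the actor/critic loop concrete, here is a deliberately tiny sketch in plain Python. It is a toy built on loud assumptions, not how deepfake tools work: the "data" are single numbers drawn from a normal distribution centered at `REAL_MEAN`, the actor can only shift random noise by a learned offset `b`, and the critic is a one-variable logistic classifier. The names `train_toy_gan`, `REAL_MEAN` and `INIT_OFFSET`, and all the numeric settings, are hypothetical choices for illustration.

```python
import math
import random

# Hypothetical toy setup: "real" data are single numbers drawn from N(REAL_MEAN, 1).
REAL_MEAN = 0.0
INIT_OFFSET = -4.0  # where the actor's fakes start out, far from the real data


def sigmoid(u: float) -> float:
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, batch: int = 32, lr: float = 0.05, seed: int = 0):
    """Alternate critic and actor updates; return the learned (b, w, c)."""
    rng = random.Random(seed)
    b = INIT_OFFSET  # actor: fake = N(0, 1) noise + b
    w, c = 0.0, 0.0  # critic: D(x) = sigmoid(w * x + c), its estimate that x is real

    for _ in range(steps):
        real = [rng.gauss(REAL_MEAN, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Critic step: gradient descent on -log D(real) - log(1 - D(fake)),
        # i.e. get better at telling the two apart.
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Actor step: gradient descent on -log D(fake), i.e. move the fakes
        # toward whatever the updated critic currently rates as "real".
        gb = 0.0
        for x in fake:
            d = sigmoid(w * x + c)
            gb -= (1.0 - d) * w
        b -= lr * gb / batch

    return b, w, c
```

After `b, w, c = train_toy_gan()`, the offset `b` should sit close to `REAL_MEAN`, and the critic's score `sigmoid(w * REAL_MEAN + c)` should hover near 0.5: it can no longer tell real from fake. Real deepfake systems replace both players with deep neural networks trained on images, but the alternating critic-then-actor update is the same basic idea.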

Because GANs, though relatively new, are designed to outwit an AI system, it remains to be seen whether any automated system could catch them.

Currently, detecting a digital forgery involves three steps. The first is to examine the digital file for signs of tampering, such as two images or videos that have been obviously stitched together. The second is to check the lighting and other physical properties for anything that seems askew. The third is to look for logical inconsistencies, such as the wrong weather for the date or the wrong background for the location. This third step is the hardest to automate with AI.
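As an illustration of the first step only, the sketch below flags frames where a clip's overall brightness jumps far more than it does anywhere else, a crude sign that two pieces of footage may have been joined. It assumes frames arrive as non-empty 2-D lists of grayscale values (0 to 255); `frame_brightness`, `suspicious_cuts` and the `factor=5.0` threshold are hypothetical choices, not the method or API of any real forensic tool.

```python
from statistics import median


def frame_brightness(frame):
    """Mean pixel value of a frame given as a 2-D list of grayscale values."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def suspicious_cuts(frames, factor=5.0):
    """Return indices i where frame i differs sharply from frame i - 1.

    A jump is flagged when it exceeds `factor` times the median jump, so
    ordinary motion and noise are tolerated but an abrupt splice stands out.
    """
    levels = [frame_brightness(f) for f in frames]
    jumps = [abs(b - a) for a, b in zip(levels, levels[1:])]
    if not jumps:
        return []
    typical = median(jumps) or 1e-9  # avoid a zero threshold on perfectly static clips
    return [i + 1 for i, j in enumerate(jumps) if j > factor * typical]
```

On a clip whose brightness creeps up by one level per frame and then leaps by about 100, `suspicious_cuts` returns the index of the first frame after the leap. Ordinary scene cuts trip the same test, and a well-made GAN fake that never splices footage would not trip it at all, which is why the lighting and logic checks matter.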

Deepfakes use a machine-learning technique known as deep learning to automatically insert faces into existing video. Large amounts of data are fed into a simulated neural network, which learns to perform tasks as demanding as accurate face recognition. That same approach makes malicious video manipulation far too easy.

Anyone with modest technical knowledge and access to the tool can generate new deepfakes.

However, the problem extends beyond innocent face-swapping: the technology’s existence means that it will soon be hard to tell whether a photo, video, or audio clip was generated by a machine. Aviv Ovadya, chief technologist at the University of Michigan’s Center for Social Media Responsibility, fears that these new technologies will be used to damage reputations, influence elections, and much worse.

At an event organized by Bloomberg on May 15th, Ovadya said:

“These technologies can be used in wonderful ways for entertainment, and also lots of very terrifying ways. You already have modified images being used to cause real violence across the developing world. That’s a real and present danger.”

AI is making the technology far more accessible, which puts digital forensics specialists in an arms race. Hany Farid, a Dartmouth professor who specializes in digital forensics, put it plainly: “It’s gone from state-sponsored actors and Hollywood to someone on Reddit. The urgency we feel now is in protecting democracy.”
