Apple yesterday announced new safety features, most of them aimed at protecting children, which the company states will “evolve and expand over time”.

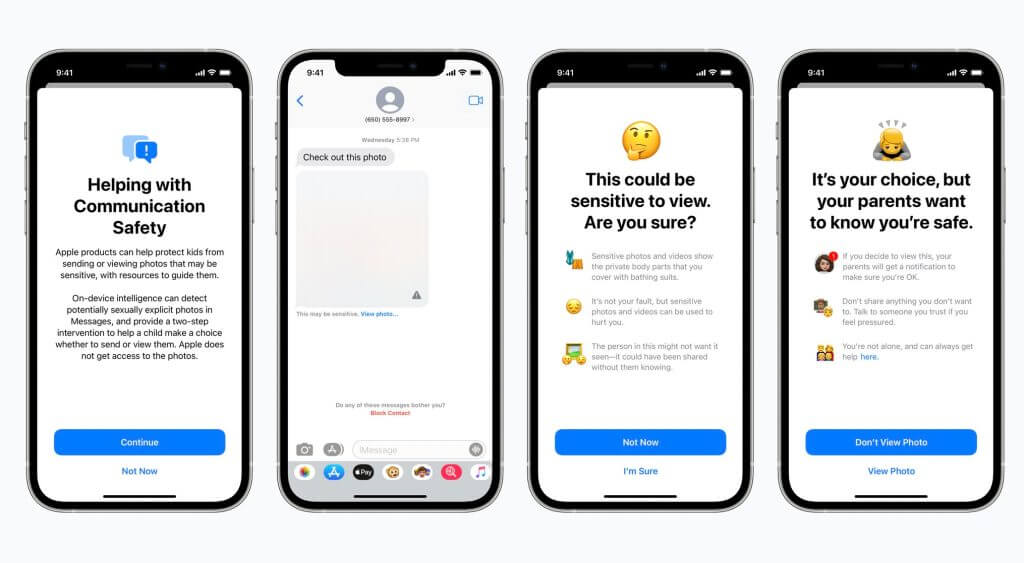

One of these features can be found in the Messages app: according to Apple, when a child who is part of an iCloud Family receives or attempts to send sexually explicit photos, they will be shown a warning message.

Messages uses on-device machine learning that analyzes the image attachments and determines if they contain sensitive content.

When the child receives what Messages believes to be a sexually explicit image, the app will blur it and display a warning message stating that the image “may be sensitive”. If the child taps the “View Photo” option, the app will explain why the image is believed to contain sensitive content.

At the same time, the iCloud Family parent will receive a notification. The same action will be taken if a child under the age of 13 attempts to send, or ends up sending, a sexually explicit image.

Apple has also announced that it is taking further steps to combat the spread of Child Sexual Abuse Material (CSAM) by detecting such images when they are stored in iCloud Photos. If any are detected, the company will report them to the National Center for Missing and Exploited Children.

As expected, all of this has sparked numerous debates online regarding user privacy. However, Apple insisted that the image analysis is done on device and that before an image is stored in iCloud Photos “an on-device matching process is performed for that image against the unreadable set of known CSAM hashes.

“This matching process is powered by a cryptographic technology called private set intersection, which determines if there is a match without revealing the result. Private set intersection (PSI) allows Apple to learn if an image hash matches the known CSAM image hashes, without learning anything about image hashes that do not match. PSI also prevents the user from learning whether there was a match.”

Apple also employs a technology called threshold secret sharing, which ensures that the contents of the so-called safety vouchers cannot be interpreted by Apple unless the account crosses a certain threshold of known CSAM matches, a threshold which Apple has not disclosed.

The threshold is the number of CSAM matches required before Apple manually reviews a report and confirms whether it is correct. This is intended to keep accounts from being flagged incorrectly and, according to Apple, the chance of incorrectly flagging a given account is less than 1 in 1 trillion per year.
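
For readers curious how a threshold scheme can work in principle, below is a minimal sketch of Shamir's secret sharing, a classic threshold secret sharing construction. It is only an illustration of the general idea and not Apple's implementation, which this article does not detail. The function names `split_secret` and `recover_secret`, the choice of prime, and the example numbers are all assumptions made for the sketch.

```python
import secrets

# A Mersenne prime used as the field modulus. Illustrative choice only.
PRIME = 2**127 - 1


def split_secret(secret, threshold, num_shares):
    """Split `secret` into `num_shares` shares; any `threshold` of them recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in the range [0, PRIME)")
    if not 1 <= threshold <= num_shares:
        raise ValueError("need 1 <= threshold <= num_shares")
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, evaluated modulo PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Recover the secret by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    key = secrets.randbelow(PRIME)  # hypothetical key protecting some data
    shares = split_secret(key, threshold=3, num_shares=5)
    print(recover_secret(shares[:3]) == key)  # three shares meet the threshold
```

In this toy version, each share on its own reveals nothing about the key, and the key can only be rebuilt once the required number of shares is available. That is the property the threshold described above relies on: below the threshold, the protected contents stay unreadable.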

Regardless of these safety nets, the company has received strong pushback against these features.

The Electronic Frontier Foundation has warned of the potential risks in a blog post where it stated:

“We’ve already seen this mission creep in action. One of the technologies originally built to scan and hash child sexual abuse imagery has been repurposed to create a database of “terrorist” content that companies can contribute to and access for the purpose of banning such content. The database, managed by the Global Internet Forum to Counter Terrorism (GIFCT), is troublingly without external oversight, despite calls from civil society. While it’s therefore impossible to know whether the database has overreached, we do know that platforms regularly flag critical content as “terrorism,” including documentation of violence and repression, counterspeech, art, and satire.”

In response to these comments, Apple has distributed an internal memo written by Sebastien Marineau-Mes, a software VP at Apple, which was obtained by 9to5Mac.

In it, Marineau-Mes recognizes that, while the features have received “many positive responses”, Apple is aware that there have been “misunderstandings” regarding how the features work and that the company believes these features are necessary in order to “protect children”.

In the memo, Marineau-Mes says that maintaining the safety of children “is such an important mission. In true Apple fashion, pursuing this goal has required deep cross-functional commitment, spanning Engineering, GA, HI, Legal, Product Marketing and PR. What we announced today is the product of this incredible collaboration, one that delivers tools to protect children, but also maintain Apple’s deep commitment to user privacy.”

He adds that Apple is aware some people are “worried about the implications” of these features but that it “will continue to explain and detail the features so people understand what we’ve built. And while a lot of hard work lays ahead to deliver the features in the next few months, I wanted to share this note that we received today from NCMEC. I found it incredibly motivating, and hope that you will as well.”

The memo was accompanied by a note from the National Center for Missing and Exploited Children, signed by executive director of strategic partnerships, Marita Rodriguez.

The note, in full, reads:

Team Apple,

I wanted to share a note of encouragement to say that everyone at NCMEC is SO PROUD of each of you and the incredible decisions you have made in the name of prioritizing child protection.

It’s been invigorating for our entire team to see (and play a small role in) what you unveiled today.

I know it’s been a long day and that many of you probably haven’t slept in 24 hours. We know that the days to come will be filled with the screeching voices of the minority.

Our voices will be louder.

Our commitment to lift up kids who have lived through the most unimaginable abuse and victimizations will be stronger.

During these long days and sleepless nights, I hope you take solace in knowing that because of you many thousands of sexually exploited victimized children will be rescued, and will get a chance at healing and the childhood they deserve.

Thank you for finding a path forward for child protection while preserving privacy.

Apple also announced that it will be rolling out the system on a country-by-country basis, in compliance with local laws.
