Has your Facebook profile ever been reported, leaving you with no way to log in? Or have you been on the other side of the fence, reporting offensive content on Facebook and wondering whether the company would take action?

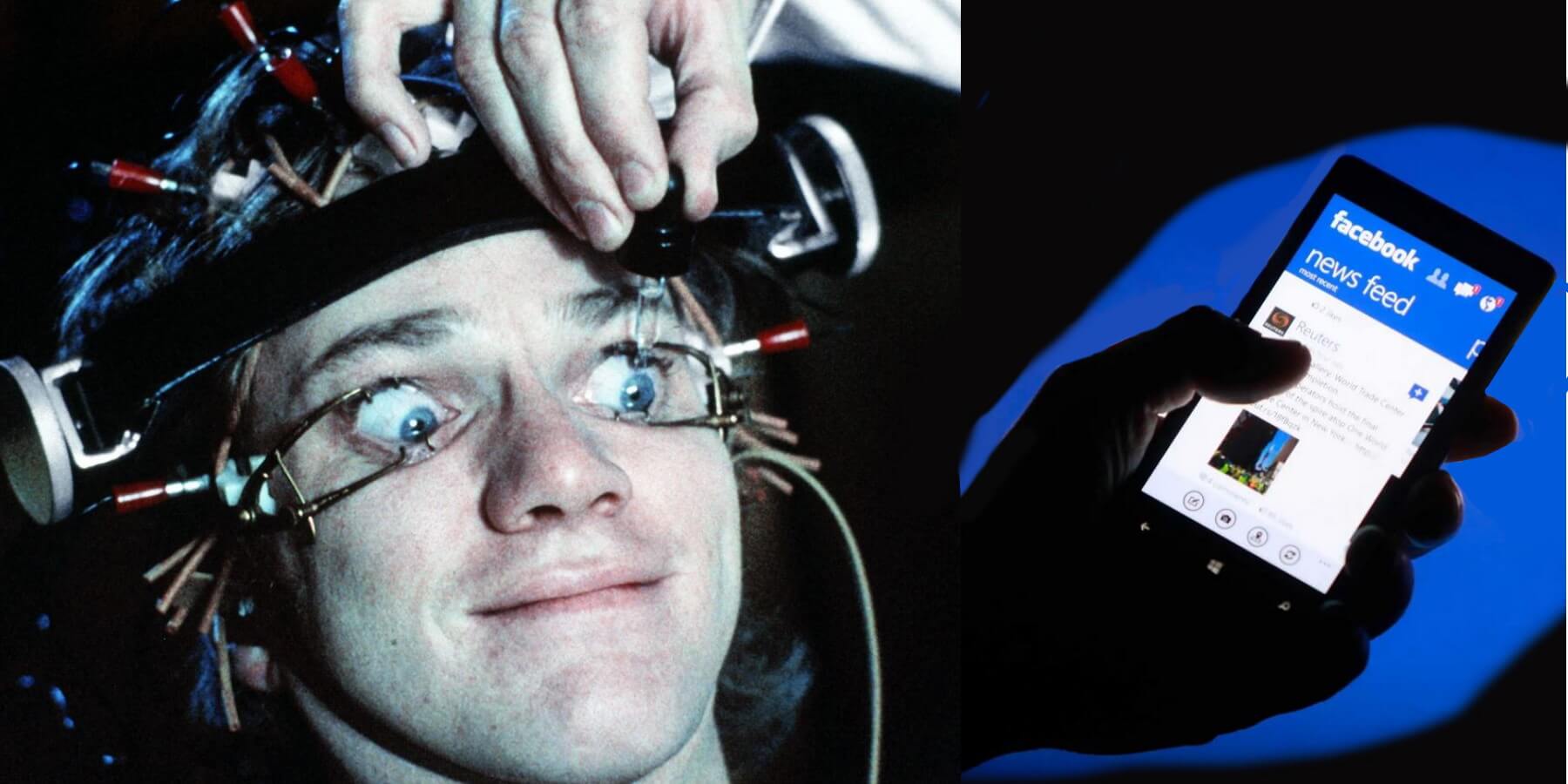

In most of these situations, it’s the job of Facebook moderators to curate your newsfeed and make sure that no offensive or graphic content lands in front of your eyeballs.

Here’s what they go through.

So, what does a Facebook moderator do?

Essentially, Facebook moderators are paid to review hundreds, even thousands, of Facebook posts, from photos and videos to regular text posts, to ensure that the content users see is in line with Facebook’s terms of service.

According to a Wall Street Journal report, being a Facebook content moderator is “the worst job in technology” and, if you look at the type of content being moderated, you can probably see why.

“Facebook employs about 15,000 content moderators directly or indirectly. If they have three million posts to moderate each day, that’s 200 per person: 25 each and every hour in an eight-hour shift. That’s under 150 seconds to decide if a post meets or violates community standards,” says Forbes, citing a report by NYU Stern.

The same report reveals that, every single day, around 3 million posts, pictures and videos are either flagged by AI or reported by users.

The report also includes a diagram showing how the decision to remove a post is made.

How Facebook handles your feed

Other sources put the number of Facebook moderators much higher.

How much do Facebook moderators make?

Thanks to a troubling investigation by The Verge, we know that in the US, full-time Facebook content moderators sometimes work for Cognizant, a services vendor, which calls the job “process executive”. With a workforce of almost 1,000 people, Cognizant’s Phoenix site is one of the largest Facebook moderation centers in the US, and almost all of its employees work for Cognizant and are contracted out to Facebook.

That means that, despite working on Facebook and for Facebook, their salaries and benefits (or lack thereof) are handled by Cognizant. According to The Verge, moderators in Phoenix make just $28,800 per year, while the average Facebook employee has a total compensation of $240,000.

The picture is similar in Ireland. “Gray can remember meeting only one Facebook employee during his nine months there. He was paid a basic rate of €12.98 per hour, with a 25 per cent shift bonus after 8pm, plus a travel allowance of €12 per night – the equivalent of about €25,000 to €32,000 per year. The average Facebook employee in Ireland earned €154,000 in 2017,” revealed an Irish Times report.

In Austin, Texas, Facebook moderators are contracted through Accenture, another outsourcing company. There, some workers complained that, despite being exposed to some of the worst content on the Internet, they received no adequate mental health support and were told to do “breathing exercises” to cope with the stress.

Content moderators contracted through Accenture in the U.S. make $16.50 per hour, and those outside the country reportedly earn less. Meanwhile, Accenture is estimated to earn around $50 per hour from Facebook for each contracted moderator.

In Ireland, another hotspot for content moderators, workers found themselves called into the office even though Facebook’s own employees were working fully remotely because of the pandemic. Because the moderators were contractors, each contracting company set its own rules.

Facebook Moderators: PTSD, Stress & More

“Facebook moderators working for one of the company’s Dublin-based contractors are being forced to go into the office, even as Ireland returns to its highest tier of Covid lockdown, after their employer categorised them as essential workers,” reported The Guardian last year.

“Facebook themselves, they are making almost all their employees work from home. Even people working in the same team, on the same project as us – we’re doing the same work – Facebook is letting them work from home and not us,” said one anonymous moderator.

The situation was the same in Hyderabad, India, where 1,600 Facebook moderators worked for yet another third-party contractor, Genpact.

There, as COVID cases surged over the summer, Facebook content moderators were summoned back to the office. Before the pandemic, they earned less than $2 an hour and received in-office meals or prepaid meal cards. Once the pandemic hit, workers said, those benefits disappeared, a big hit to their already low incomes.

“I don’t think it’s possible to do the job and not come out of it with some acute stress disorder or PTSD,” said one moderator who worked in Arizona as a Cognizant contractor for Facebook.

Considering those conditions, it’s no wonder Facebook settled a multimillion-dollar lawsuit with its moderators over mental health issues developed on the job. Under that settlement, which covers 11,250 moderators, each worker would receive a minimum of $1,000, with additional compensation for those diagnosed with mental health conditions linked to the job.

Facebook AI moderators

For Facebook, that might have been seen as just another operational cost, but the company is making strides toward eliminating its content moderation problems.

One solution? Facebook AI moderators: new algorithms meant to replace a workforce that consistently complains of low pay and high stress.

According to a recent Wall Street Journal report, which reviewed internal Facebook documents, the company doesn’t have enough employees who know the local languages in every country where Facebook has a presence, so it can’t properly monitor the content there.

With more than 90% of users outside North America, the situation looks grim in areas like Ethiopia and Arabic-speaking countries, where conflict, ethnic cleansing, human trafficking and drug cartels are huge problems.

To make matters worse, a former Facebook vice president told the WSJ that the potential harm the platform could cause in foreign countries is “simply the cost of doing business” and that there is “very rarely a significant, concerted effort to invest in fixing those areas.”

So, is AI content moderation the solution? It could be, but not yet.

An article in Ars Technica reported that “Facebook AI moderator confused videos of mass shootings and car washes”.

“Through the end of 2019, we expect to have trained our systems to proactively detect the vast majority of problematic content,” said Mark Zuckerberg in November 2018, and ever since then the company has relied more and more on automated tools.

However, internal Facebook documents revealed by whistleblowers and journalists paint a grim picture. Automated moderators mistook cockfights for car washes and mass shootings for paintball games. On the text side, the company estimates that it can identify just 0.23 percent of the hate speech posted on the platform in Afghanistan.

AI might have been touted as the solution, but it’s simply not ready yet.

The unfortunate reality is that, for every minute you scroll through this app, a worker somewhere has to see the worst of humanity. You decide where to go next.

Follow TechTheLead on Google News to get the news first.