The new iPhone 11 arrived with several photography improvements alongside its many other new features.

However, Apple appears to be just getting started and still has plans for the handset. It will introduce a new photo processing system called Deep Fusion, which it will preview in the beta versions arriving in the coming weeks.

Deep Fusion is a new image processing system that uses the computing power of the Apple A13 Bionic processor and its Neural Engine to guarantee better quality for shots taken with compatible devices thanks to machine learning techniques. The software analyzes the photos pixel by pixel, optimizing the details and removing the noise in every part of the frame.

The system specifically aims to improve photos taken indoors under intermediate lighting, and it does so without the user noticing anything, since the function runs automatically in the background.

In this way, iPhones will have three different techniques depending on the level of ambient light: Smart HDR for bright images, Night Mode for night time shots and Deep Fusion in medium or low light conditions.

Deep Fusion only works with the wide-angle and telephoto cameras, while the ultra-wide camera of the new iPhone supports neither Deep Fusion nor Night Mode. In addition, Deep Fusion cannot be switched on or off manually; the software applies it whenever it deems it appropriate.
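The selection logic described above can be pictured with a small Python sketch. It is only an illustration: the lux cut-offs, the lens names and the `pick_mode` function are hypothetical, since Apple has not published how the camera actually decides, and the fallback to Smart HDR on the ultra-wide camera is an assumption.

```python
from enum import Enum


class Mode(Enum):
    SMART_HDR = "Smart HDR"
    DEEP_FUSION = "Deep Fusion"
    NIGHT_MODE = "Night Mode"


# Hypothetical lux cut-offs, chosen only for illustration.
# Apple has not published the thresholds it uses.
BRIGHT_LUX = 600.0
DARK_LUX = 10.0


def pick_mode(lens: str, lux: float) -> Mode:
    """Pick a processing mode from the lens in use and the ambient light level."""
    if lens not in ("wide", "telephoto", "ultra_wide"):
        raise ValueError(f"unknown lens: {lens}")
    # The ultra-wide camera supports neither Deep Fusion nor Night Mode,
    # so this sketch assumes it always falls back to Smart HDR.
    if lens == "ultra_wide":
        return Mode.SMART_HDR
    if lux >= BRIGHT_LUX:
        return Mode.SMART_HDR
    if lux <= DARK_LUX:
        return Mode.NIGHT_MODE
    # Everything in between is treated as medium or low light.
    return Mode.DEEP_FUSION
```

For example, `pick_mode("wide", 100.0)` returns `Mode.DEEP_FUSION` under these assumed thresholds, while the same light level on the ultra-wide camera returns `Mode.SMART_HDR`.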

Deep Fusion is a complex process that involves both hardware and software. Even before the user presses the shutter button, the system saves three photographs taken in rapid succession at very fast shutter speeds to freeze any movement. As soon as the shutter button is pressed, three more photographs are taken with a short exposure, plus one with a significantly longer exposure time.
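One way to picture this capture sequence is a small rolling buffer that always holds the latest short exposures. The Python sketch below is a simplified model, not Apple's implementation: `Frame`, `CaptureBuffer` and the method names are hypothetical, and frames are represented by an index and an exposure label rather than real image data.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Frame:
    index: int
    exposure: str  # "short" or "long"


class CaptureBuffer:
    """Keeps the most recent short-exposure frames while the viewfinder is open."""

    def __init__(self, pre_shutter_frames: int = 3):
        # A bounded deque drops the oldest frame once it is full.
        self._recent = deque(maxlen=pre_shutter_frames)
        self._next_index = 0

    def _new_frame(self, exposure: str) -> Frame:
        frame = Frame(self._next_index, exposure)
        self._next_index += 1
        return frame

    def on_preview_tick(self) -> None:
        """Called continuously before the shutter press."""
        self._recent.append(self._new_frame("short"))

    def on_shutter_press(self) -> list:
        """Return the three buffered frames, three new short frames and one long frame."""
        pre = list(self._recent)
        post = [self._new_frame("short") for _ in range(3)]
        long_frame = self._new_frame("long")
        return pre + post + [long_frame]
```

After any number of preview ticks (at least three), a call to `on_shutter_press()` yields seven frames: six short exposures followed by one long exposure, matching the sequence described above.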

All the photos are then merged into a single image via a process that, according to Apple, is different from what happens with Smart HDR. Deep Fusion automatically selects the short-exposure shot with the most detail and merges it with the long exposure, which supplies color and noise information. By processing just these two frames together, it aims for maximum quality while staying efficient. The images then go through a four-step procedure in which they are analyzed pixel by pixel.
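The idea of picking the most detailed short frame and combining it with the long exposure can be illustrated with a toy Python sketch. This is not Apple's algorithm, which relies on machine learning and has not been published. Here sharpness is assumed to be a simple sum of neighbouring pixel differences, the merge is a plain high-frequency and low-frequency split using a 3x3 box blur, and frames are assumed to be aligned grayscale grids of equal size. All function names are hypothetical.

```python
def sharpness(img):
    """Sum of absolute differences between horizontal and vertical neighbours."""
    total = 0.0
    for y, row in enumerate(img):
        for x, value in enumerate(row):
            if x + 1 < len(row):
                total += abs(row[x + 1] - value)
            if y + 1 < len(img):
                total += abs(img[y + 1][x] - value)
    return total


def box_blur(img):
    """3x3 mean filter; edge pixels average over the neighbours that exist."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        out_row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out_row.append(sum(window) / len(window))
        out.append(out_row)
    return out


def fuse(short_frames, long_frame):
    """Add the fine detail of the sharpest short frame to the smoothed long frame."""
    sharpest = max(short_frames, key=sharpness)
    detail_base = box_blur(sharpest)   # low frequencies of the short frame
    tone_base = box_blur(long_frame)   # smoothed tones of the long frame
    h, w = len(long_frame), len(long_frame[0])
    return [[tone_base[y][x] + (sharpest[y][x] - detail_base[y][x])
             for x in range(w)]
            for y in range(h)]
```

In this sketch the long exposure contributes the smooth, low-noise base, while only the texture that the blur removes from the short frame is added back on top. A real pipeline would also need to align the frames and handle color channels.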

Shooting with Deep Fusion active takes slightly longer than a regular Smart HDR shot, about one second; in fact, if you tap the preview of the shot within that time, iOS 13 shows a temporary image that is then replaced with the version processed by Deep Fusion.

The first developer beta with the new shooting mode will arrive shortly, and the function will be available to all iPhone 11 owners when iOS 13.2 rolls out.

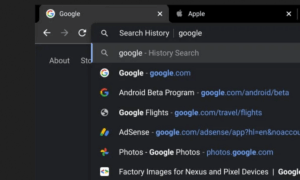
