In one of the most troubling reports to emerge in recent days, the BBC says that Ukrainian authorities are using a controversial facial recognition tool to identify people. Ukraine has deployed Clearview AI on both dead victims and living people suspected of being on Russia's side.

The controversial Clearview AI app has few fans among privacy and security experts, but the Ukrainian authorities had no qualms about accepting it when it was offered free of charge last month. Now, according to the BBC, Ukrainian authorities are using Clearview AI to identify dead people and, in some cases, people stopped at checkpoints.

The BBC says it saw an email from a Ukrainian agency confirming that more than 1,000 living suspects had been searched using Clearview.

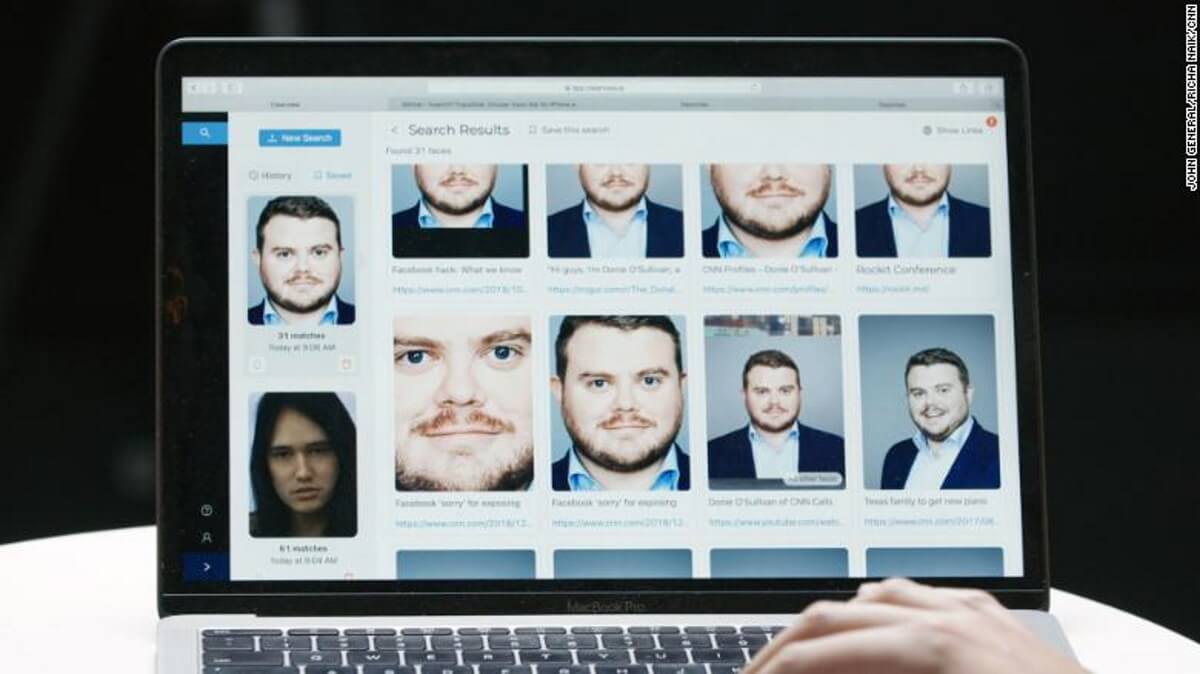

From a single photo of an individual, Clearview AI scours its database of billions of pictures to find matches. It searches through photos obtained from social media, so even people without accounts are not safe, since friends can always upload pictures of them.

Clearview AI has been surrounded by controversy since its inception, as the app scraped billions of photos from social platforms like Facebook and Twitter to create “a search engine for faces.”

Major tech companies, including Facebook, Twitter and Google, have sent cease-and-desist letters to Clearview demanding that it stop collecting users' photos without consent.

Unfortunately, authorities around the world use Clearview AI for a range of purposes, despite the shaky legal ground it stands on.

Canada's Office of the Privacy Commissioner (OPC) has ruled it to be an illegal tool. In the US, the Department of Homeland Security and Immigration and Customs Enforcement (ICE) have both been sued by nonprofit organizations arguing that the public has a right to know what these agencies are doing with this type of technology and why.

Albert Fox Cahn of the Surveillance Technology Oversight Project told Forbes that the use of Clearview AI in Ukraine is "a human rights catastrophe in the making," and explained his reasoning with troubling clarity:

“When facial recognition makes mistakes in peacetime, people are wrongly arrested. When facial recognition makes mistakes in a war zone, innocent people get shot.”

He added that "we should be supporting the Ukrainian people with the air defenses and military equipment they ask for, not by turning this heartbreaking war into a place for product promotion."

For their part, Clearview AI are confident they’re providing valuable tech for the war.

In an interview with Reuters last month, Clearview CEO Hoan Ton-That said the company's database holds 10 billion face images scraped from social media, including 2 billion from the Russian social media platform VKontakte.

When contacted by the BBC, Ukrainian authorities did not comment on these reports.